senior developer agents

Code review is about more than checking for mistakes; it is about ensuring simplicity.

When you're responsible for a junior developer, there's an early, crucial milestone: they know when to ask for help. Before this milestone, every task must be carefully curated. Each day of radio silence — be it from embarrassment or enthusiasm — is a cause for concern.

Achieving this milestone can be difficult. It requires the developer to intuit the difference between forward motion and progress. It is, however, necessary; until they do, the developer will remain a drain on their team's resources.

And this is what we've seen in the first generation of developer agents. Consider Devin, an "AI developer." Everyone I know who's evaluated it has reported the same thing: armed with a prompt, a search engine, and boundless yes-and enthusiasm, it pushes tirelessly forward. Successful outcomes require problems to be carefully scoped, and solutions to be carefully reviewed. It is, in other words, an intern simulator.

In this post, we will be exploring a few different topics. The first is the nature of seniority in software development. The second is how agents can incrementally achieve that seniority. Lastly, we will consider our own future: if there are senior developer agents, how will we spend our time?

seniority and simplicity

In this newsletter, simplicity is defined as a fitness between content and expectations. There are two ways to accomplish this: we can shape the content, or we can shape the reader's expectations.

Whenever we explain our software, there are some natural orderings: class before method, interface before implementation, name before referent. By changing one, we change expectations for the other. This is the essence of software design.

This is why designers often fixate on the things that are explained first: the project's purpose, the architectural diagrams, and the names of various components and sub-components. These are the commanding heights of our software; they shape everything that follows.

A designer, then, is someone whose work has explanatory power for the work of others. And as a rule, the more explanatory the work, the more experienced the worker. For our purposes, seniority is just another word for ascending the explanatory chain.

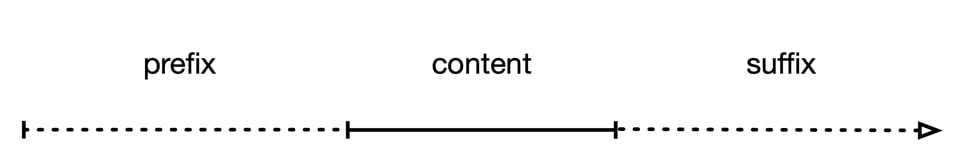

The impact of this work is, sometimes, easy to overlook. This is because there are three parts to every explanation, but only one is said aloud:

The prefix is what everyone already knows, the suffix is all tomorrow's explanations, and the content is everything in between. To understand each part, let's consider a pull request.

In addition to the diffs, each pull request usually includes an explanation. Often, this will link to external texts (bug reports, conversations on Slack, other pull requests) to explain why the change is being made. In literary theory, these are called paratexts; they "surround, shape, support, and provide context"¹ for the changes being made.

Most paratexts, however, tend to be left unreferenced. In an intra-team code review, everyone involved will have received the same onboarding, attended the same meetings, and participated in the same discussions. This shared knowledge has explanatory power. To judge the simplicity of a pull request, we must first understand its prefix.

But the prefix, on its own, is not enough. Consider how, in a code review, there are two types of negative feedback. The first is simple: this doesn't work. The second is more nuanced: this works, but won't last.

Our industry has a number of terms for this. We talk about incurring technical debt: our work today will have to be redone in the future. We talk about a lack of extensibility: our work today doesn't provide a foundation for the future. We talk about losing degrees of freedom: our work today precludes something we want to do in the future.

These are different ways of saying the same thing: this content doesn't fit its suffix. It has no explanatory power for the changes yet to come. It sets expectations that will, eventually, have to be unwound.

A code review, then, is about more than checking for mistakes. It is about ensuring simplicity. There should be a fitness between prefix, content, and suffix; each should help to explain the next.

And this is the core responsibility of a senior developer: to ensure simplicity. Within a project, this requires a strong grasp of its prefix and suffix. Across all projects, this requires an internal metric for explanatory power: the degree to which one piece of content, presented first, makes another less surprising.

This metric is not some hand-wavy abstraction. In information theory, surprisal, the mismatch between expectation and content, can be precisely quantified: it is the negative log-probability of what we observe, measured in bits, and entropy is simply its expected value. If we can model our audience's expectations, then we can create an explanatory metric.

Intuitively, this seems like a problem well-suited to a language model. And, having given it a bit more thought, I think this intuition stands. Language models aren't an off-the-shelf solution², but I'm optimistic that we can use them to create a passable explanatory metric.
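Here is a minimal sketch of such a metric in Python. In place of a language model, it swaps in a deliberately crude audience model: an adaptive unigram model with add-one smoothing, which is an assumption made purely for illustration. Explanatory power is then the number of bits saved on some content by reading a prefix first, matching the definition above. The names `surprisal_bits` and `explanatory_power`, and the `vocab_size` and `alpha` parameters, are hypothetical.

```python
import math
from collections import Counter


def surprisal_bits(tokens, context=(), vocab_size=10_000, alpha=1.0):
    """Total surprisal, in bits, of `tokens` under a toy audience model.

    Assumption: the "audience" is an adaptive unigram model with additive
    smoothing. It starts from the counts in `context` and keeps updating
    as it reads, so anything seen before becomes less surprising.
    """
    counts = Counter(context)
    total = len(context)
    bits = 0.0
    for tok in tokens:
        # Smoothed probability of this token, given everything read so far.
        p = (counts[tok] + alpha) / (total + alpha * vocab_size)
        bits -= math.log2(p)
        counts[tok] += 1
        total += 1
    return bits


def explanatory_power(prefix, content, **model_kwargs):
    """Bits saved on `content` by presenting `prefix` first.

    Positive means the prefix makes the content less surprising;
    negative means it made things (slightly) worse.
    """
    without = surprisal_bits(content, **model_kwargs)
    with_prefix = surprisal_bits(content, context=prefix, **model_kwargs)
    return without - with_prefix


content = "pop removes the value added by the latest push".split()
print(explanatory_power("a stack supports push and pop".split(), content))
print(explanatory_power("lexer converts text into tokens".split(), content))
```

With these inputs, the related prefix saves just under two bits (`push` and `pop` are no longer novel), while the unrelated prefix scores slightly negative. A real metric would need a far better model of the audience; a unigram model only notices repeated words, not implications.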

It's worth noting, however, that a better explanatory metric is not simply more accurate. Some explanations are more demanding than others. The explanatory relationship between our software's business model and product design is likely complex, requiring careful interpretation by the audience. By comparison, we'd expect the relationship between a function's name and body to be much simpler. And if it isn't, then the fault lies with the code, not the audience.

A middling explanatory metric, then, would only be useful for judging the middle (and lower) layers of our software's design. But this is fine. As we'll see, even a limited metric can get us past that early, crucial milestone. And each time we improve the metric, our agents will become a little more senior.

But first, if we want to measure the prefix's explanatory power, then it cannot be left unsaid. It must be reified.

reifying the prefix

In previous posts, our primary analytic framework has been the structure. Each structure is a graph, where each vertex is a subset of our codebase³, and each edge is a relationship. And unless a vertex represents individual lines of code, it will contain its own sub-structure.

For the purposes of this post, we will treat each structure as a collection of short, declarative sentences. Each sentence consists of two components and a relationship between them:

- The storage proxy is a router and read-through cache for the storage service.

- The lexer converts text into tokens for the parser.

- Each value added by enqueue can be removed, in order, by dequeue.

Together, these structures and sub-structures comprise our software's prefix. Every explanation of our software begins (implicitly) at the root structure, where our software is a single vertex in a larger system. From there, our explanation can descend until it reaches the relevant code.⁴
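As a rough illustration, here is a Python sketch of a structure as a tree of vertices, each carrying the sentences that relate its children, along with a hypothetical `explanation_path` helper that collects the sentences a reader passes through while descending from the root to some vertex. The `Vertex` type, the "service" and "query engine" vertices, and the choice to store sentences on the parent are all assumptions made for this example.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Vertex:
    """A region of the codebase, possibly containing its own sub-structure."""
    name: str
    children: list[Vertex] = field(default_factory=list)
    # Short declarative sentences relating this vertex's children.
    sentences: list[str] = field(default_factory=list)


def explanation_path(root: Vertex, target: str) -> list[str] | None:
    """Sentences read while descending from `root` to the vertex named `target`.

    Only the sentences of `target`'s ancestors are returned, since those
    are what a reader must already know. Returns None if `target` is absent.
    """
    if root.name == target:
        return []
    for child in root.children:
        rest = explanation_path(child, target)
        if rest is not None:
            return root.sentences + rest
    return None


# Hypothetical structure, reusing two of the sentences above.
service = Vertex(
    "service",
    children=[
        Vertex("storage proxy"),
        Vertex(
            "query engine",
            children=[Vertex("lexer"), Vertex("parser")],
            sentences=["The lexer converts text into tokens for the parser."],
        ),
    ],
    sentences=[
        "The storage proxy is a router and read-through cache for the storage service."
    ],
)

print(explanation_path(service, "lexer"))
```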

These structures, then, represent our explanatory topology. We expect each parent to have a high degree of explanatory power for its children: it's difficult to explain pop without first explaining Stack. Likewise, we expect siblings to have explanatory power: pop and push are typically explained together.

Conversely, if two nodes lack a parent or sibling relationship, then they should have little explanatory power for each other. Each can be understood, or changed, in isolation. Coupling, after all, is just another word for co-explanation.
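If we had co-explanation scores for pairs of vertices, this expectation could be checked mechanically: flag any pair where one explains the other well, even though they are neither parent and child nor siblings. The sketch below assumes such scores already exist (the numbers in the example are made up), and the names `expected_to_explain` and `unexpected_coupling` are hypothetical.

```python
def expected_to_explain(parent, a, b):
    """True if `a` and `b` are parent and child, or siblings, in the structure.

    `parent` maps each vertex name to the name of its parent vertex.
    """
    pa, pb = parent.get(a), parent.get(b)
    return pa == b or pb == a or (pa is not None and pa == pb)


def unexpected_coupling(parent, scores, threshold=1.0):
    """Pairs that explain each other well despite sitting far apart.

    `scores` maps (a, b) pairs to co-explanation scores in bits, assumed to
    come from some explanatory metric. Strongest offenders come first.
    """
    flagged = [
        pair for pair, bits in scores.items()
        if bits > threshold and not expected_to_explain(parent, *pair)
    ]
    return sorted(flagged, key=lambda pair: scores[pair], reverse=True)


# Hypothetical structure and made-up scores, purely for illustration.
parent = {"push": "Stack", "pop": "Stack", "Stack": "collections", "Lexer": "parsing"}
scores = {
    ("push", "pop"): 4.2,    # siblings: expected
    ("pop", "Stack"): 6.0,   # child and parent: expected
    ("pop", "Lexer"): 2.5,   # unrelated, yet co-explanatory: coupling
    ("push", "Lexer"): 0.1,  # unrelated and independent: fine
}
print(unexpected_coupling(parent, scores))
```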

And so, if we were to write out the sentences of our software's structures, we could use the explanatory metric to validate them. The higher-level structures will be the hardest to validate, and likely require human review. But this is fine; high-level structures are fewer in number, slower to change, and thus long-standing. They should already be widely understood.

Everywhere else, we can lean on our tooling. By omitting each sentence in turn, we can measure its individual explanatory power. We can highlight the weakest sentences, along with any code that remains surprising. And then we can iterate, reshaping the prefix and code until they fit.
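Omitting each sentence in turn is a leave-one-out ablation. A minimal Python sketch follows; the explanatory metric is passed in as a `score` callable, and the `overlap_score` used in the example is a deliberately crude stand-in (words shared between prefix and code) rather than a real metric. All names here are hypothetical.

```python
import re


def rank_sentences(sentences, code, score):
    """Leave-one-out ablation over the sentences of a prefix.

    `score(sentences, code)` is any explanatory metric (higher means the
    sentences make `code` less surprising). Returns (contribution, sentence)
    pairs, weakest first; the weakest lose the least when omitted.
    """
    baseline = score(sentences, code)
    ranked = []
    for i, sentence in enumerate(sentences):
        without = sentences[:i] + sentences[i + 1:]
        ranked.append((baseline - score(without, code), sentence))
    ranked.sort(key=lambda pair: pair[0])
    return ranked


def words(text):
    return re.findall(r"\w+", text.lower())


def overlap_score(sentences, code):
    """Crude stand-in metric: how many code tokens the sentences mention."""
    vocab = {w for s in sentences for w in words(s)}
    return sum(tok in vocab for tok in words(code))


prefix = [
    "Each value added by enqueue can be removed, in order, by dequeue.",
    "The lexer converts text into tokens for the parser.",
]
code = "def dequeue(self):\n    return self.items.popleft()"
for contribution, sentence in rank_sentences(prefix, code, overlap_score):
    print(contribution, sentence)
```

Here the lexer sentence contributes nothing to explaining `dequeue`, so it is ranked weakest; with a real metric, the same loop would surface sentences that have drifted away from the code they describe.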

Once we're done, we will have a living design document. Each time the code changes, we can check for incipient staleness in our prefix. And whenever we find it, we will be forced to make a choice: which is at fault? The prefix, or the code?

judging the content

Sometimes, this question has a simple answer. Tasks for junior developers — human or otherwise — shouldn't require much software design. Their changes, then, should preserve the prefix's explanatory power. If they don't, then something's gone awry; it's time to ask for help.

There are a few reasons this might happen:

- The task is ill-conceived. What we're trying to do doesn't fit our software's design; we need to try something different.

- The design is ill-conceived. What we're trying to do doesn't fit our software's design; we need a different design.

- The developer can't make it work. The task is too demanding; it needs to be done by someone more experienced.

This last case is relatively straightforward: we reassign the task. The other two cases, unfortunately, are a bit more challenging; to start, we need to figure out which one we're dealing with.

Here, again, we can turn to the reified prefix. How much of our prefix is undermined by our changes? And, if we update the prefix to match our changes, how much does that undermine the surrounding code? This is the impact of our pull request. The higher-impact the changes, the more likely they are to be ill-conceived.
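Read literally, the two questions above suggest measuring impact as two losses added together: the explanatory power the prefix loses against the changed code, and the explanatory power the surrounding code loses once the prefix is updated. The sketch below assumes both sets of scores have already been computed by some explanatory metric; weighting the two losses equally is an assumption, and the function names and example scores are hypothetical.

```python
def lost(before, after):
    """Total explanatory power lost, counting only declines.

    Both arguments map a name (a prefix sentence or a code region) to a
    score in bits; anything missing from `after` counts as zero.
    """
    return sum(max(0.0, score - after.get(name, 0.0)) for name, score in before.items())


def pull_request_impact(prefix_before, prefix_after, code_before, code_after):
    """Impact of a change, per the two questions above (equal weights assumed).

    prefix_before/after: each prefix sentence's explanatory power against the
        code before and after the change.
    code_before/after: how well the prefix explains each surrounding code
        region, before and after the prefix is updated to match the change.
    """
    return lost(prefix_before, prefix_after) + lost(code_before, code_after)


# Made-up scores: one sentence is undermined, one region gets more surprising.
impact = pull_request_impact(
    prefix_before={"The lexer converts text into tokens for the parser.": 3.0},
    prefix_after={"The lexer converts text into tokens for the parser.": 1.0},
    code_before={"parser.py": 5.0},
    code_after={"parser.py": 4.5},
)
print(impact)
```

The sketch ignores improvements rather than netting them against losses, so a change can't offset an undermined sentence by strengthening an unrelated one; whether that is the right trade-off is an open question.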

A reified prefix, then, plays two roles. For junior developers, it's a set of guardrails. If they ever bump up against its limitations, then it's time to ask for help. For senior developers, it's a bicycle for their software's design space.

Used as a guardrail, a prefix will make agents a tiny bit more senior. It may even, on its own, make them net-positive contributors. If an agent can quickly recognize, and explain, its inability to create a low-impact pull request, there's real value in letting it try.

And from there, we can let the agent ride the bicycle, and see what happens. We can allow it to propose higher-impact changes, and if they're any good, we can continue to raise that threshold. Whenever the agent gets a little more senior, we get a little more value.
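One way to picture this ratchet is a small gate that routes each pull request by its impact and raises the threshold as reviews come back positive. Everything here, including the `ImpactGate` name, the starting threshold, the step size, and the rule that three accepted reviews in a row earn a higher threshold, is an illustrative assumption rather than a recommendation.

```python
from dataclasses import dataclass


@dataclass
class ImpactGate:
    """Route an agent's pull requests by impact, raising the bar as it earns trust.

    The starting threshold, step size, and streak length are illustrative.
    """
    threshold: float = 1.0
    step: float = 0.5
    streak_needed: int = 3
    streak: int = 0

    def route(self, impact: float) -> str:
        # Low-impact changes go to review; anything higher means asking for help.
        return "review" if impact <= self.threshold else "ask for help"

    def record_review(self, accepted: bool) -> None:
        # A run of accepted changes earns a higher threshold; a rejection resets it.
        self.streak = self.streak + 1 if accepted else 0
        if self.streak >= self.streak_needed:
            self.threshold += self.step
            self.streak = 0


gate = ImpactGate()
print(gate.route(1.2))  # above the starting threshold
for _ in range(3):
    gate.record_review(accepted=True)
print(gate.route(1.2))  # now within the raised threshold
```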

scrying the suffix

Let's imagine, for the moment, that we had an oracle. While the oracle can't tell us exactly what we'll be working on, it can provide a representative sample of possible future tasks. With this in hand, measuring the fitness between content and suffix would become a straightforward, albeit expensive, Monte Carlo process.

First, we take a large sample of future tasks. Then we try to solve each task, both with and without our proposed changes. Finally, we compare their complexity: did our changes make the task easier, or harder? If, on average, they make things easier, then our content has explanatory power for the suffix.
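The Monte Carlo process can be sketched in a few lines of Python. Both the oracle (`sample_task`) and the difficulty measure are hypothetical callables supplied by the caller; in the toy example, the task mix and costs are invented purely to exercise the code.

```python
import random
import statistics


def mean_difficulty_change(sample_task, difficulty, n=1_000, seed=0):
    """Monte Carlo estimate of how a proposed change affects future work.

    sample_task(rng) -> a task drawn from the (hypothetical) oracle.
    difficulty(task, with_change) -> the cost of solving `task` with or
        without the proposed change applied.
    A negative result means the change makes future tasks easier on average.
    """
    rng = random.Random(seed)
    deltas = []
    for _ in range(n):
        task = sample_task(rng)
        deltas.append(difficulty(task, True) - difficulty(task, False))
    return statistics.mean(deltas)


# Invented task mix and costs, purely to exercise the estimator.
def sample_task(rng):
    return "new storage backend" if rng.random() < 0.7 else "new cache policy"


COSTS = {
    ("new storage backend", True): 2, ("new storage backend", False): 5,
    ("new cache policy", True): 4, ("new cache policy", False): 3,
}

estimate = mean_difficulty_change(sample_task, lambda task, with_change: COSTS[(task, with_change)])
print(estimate)
```

In this toy setup, the change makes backends cheaper and cache policies more expensive. Because backends dominate the sample, the estimate lands close to the exact expectation of -1.8, meaning the change helps on average even though it precludes something.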

Unfortunately, we don't have any oracles. Our roadmap can only offer a single, fragile narrative, and what we need is a garden of forking paths. When evaluating a design choice, we must consider both what it enables and what it precludes. We need to ensure that the paths not followed, our counterfactuals, aren't something we'll later regret.

And this, by itself, is a more tractable problem. We need something akin to QuickCheck, which, given a failing input for a test, will try to shrink it down to its minimal form. In our case, we want minimal counterfactuals: simple tasks which, if we make the proposed changes, become significantly more complex.
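Shrinking in the style of QuickCheck can be sketched as a greedy loop: keep removing one requirement at a time for as long as the smaller task is still a counterfactual. This sketch assumes a task can be represented as a list of requirements, and that some `is_counterfactual` predicate (hypothetical, and the hard part in practice) can decide whether the proposed change makes a task significantly more complex.

```python
def shrink(task, is_counterfactual):
    """Greedily shrink `task` while it remains a counterfactual.

    `task` is a list of requirements. `is_counterfactual(task)` is assumed to
    report whether the proposed change makes that task significantly more
    complex. As in QuickCheck, we try smaller candidates and keep the first
    one that still "fails", repeating until no single removal works.
    """
    if not is_counterfactual(task):
        raise ValueError("start from a task the change makes harder")
    progress = True
    while progress:
        progress = False
        for i in range(len(task)):
            candidate = task[:i] + task[i + 1:]
            if is_counterfactual(candidate):
                task, progress = candidate, True
                break
    return task


# Hypothetical: suppose the proposed change hard-codes a write-through cache,
# so any task that needs write-back caching becomes much harder.
task = ["add a tenant", "support write-back caching", "emit metrics", "rate limit"]
print(shrink(task, lambda t: "support write-back caching" in t))
```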

Once generated, they can be added to our review process. In our imagined future, each pull request will have four parts: the description, the codebase diffs, the prefix diffs, and the counterfactuals. And then, finally, a person must review the changes.

the future of software development is software design

There are two ways to speed up software development: we can make code easier to generate, or we can make code easier to evaluate. Recently, our industry's attention has been on the former; it is, after all, generative AI.

But generation isn't the problem; language models can, and will, drown you in code. The challenge is ensuring the code is worth the time it takes to evaluate. And so, unless the quality of your code is improving day-over-day, it's worth considering the other side of that equation.

In this post, I've suggested a few different ways that evaluation can be improved. While I think all of them are promising, the only part that's load-bearing is the explanatory metric. If we have that, then agents can begin to evaluate their own output. They can iterate, and improve. And whenever they hit a fixed point, they can ask a human to enter the loop.
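The loop described here (iterate while the metric improves, and hand off at a fixed point) might look like the following sketch. The `revise` and `score` callables are hypothetical stand-ins for the agent and the explanatory metric; `min_gain` and `max_rounds` are assumed safeguards against endless tiny improvements.

```python
def iterate_until_fixed_point(draft, revise, score, max_rounds=20, min_gain=1e-6):
    """Let an agent revise its own output until the metric stops improving.

    revise(draft) -> the agent's next attempt (hypothetical callable).
    score(draft) -> an explanatory metric; higher is better (also hypothetical).
    Returns the best draft, its score, and whether a fixed point was reached;
    either way, this is where a human enters the loop.
    """
    best, best_score = draft, score(draft)
    for _ in range(max_rounds):
        candidate = revise(best)
        candidate_score = score(candidate)
        if candidate_score <= best_score + min_gain:
            return best, best_score, True  # fixed point: no meaningful gain
        best, best_score = candidate, candidate_score
    return best, best_score, False  # ran out of rounds while still improving


# Toy stand-ins: each revision adds one point, capped at 5.
print(iterate_until_fixed_point(0, lambda x: min(x + 1, 5), lambda x: x))
```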

And this, I think, is a plausible future for software development. If agents are sufficiently inexpensive, then every task can be delegated by default. The agent will make as much progress as it can, and then report back. We will, by necessity, develop an intuition for why they failed. Does the task need to change? The design? Or do we simply need to implement it ourselves?

We will, in other words, all become lead developers. And this may not be a smooth transition. Today, these skills are treated as inherently tacit; they cannot be taught, only learned. We rely on the fact that some people, after years of hands-on experience, will demonstrate the necessary aptitudes.

In this imagined future, we'd need a more direct path. And this, I hope, is where newer facets of the review process — the prefix, the counterfactuals, and so on — would come into play. By giving voice to the unspoken parts of each explanation, we make it easier for junior developers to participate.

Looking to my own future, this is something I'd enjoy building. I have, for the past few years, been working on a book about software design. I'm excited by the possibility of putting those ideas into practice. If you have a role matching that description, drop me a line. I'd be happy to chat.

-

1. Consalvo 2007, p. 182.

2. On the surface, there seems to be a strong resemblance between a language model's perplexity and human surprisal. But the expectations we derive from a text come not just from what it says, but from what it implies. Perplexity may capture some surface-level implications, but it won't get us all the way there.

3. More generally, vertices in a structure can represent any abstract concept (datatypes, actors, actions, and so on), but for the purposes of this post, they represent contiguous regions of code.

4. In practice, these paths may be a little indirect. In an MVC web application, for instance, reaching the controller requires a detour through the model.

-

Hey Zach, I enjoyed this latest post!

I appreciate how you're grappling with emerging AI tools and applying your views to your ongoing work in explaining software.

Your first 3 graphs are especially compelling to me. For anyone who has worked anywhere around a developer team that includes juniors, this is relatable and engaging. And the way you quickly bring us up to speed on LLM oriented software tools is great.

Since the third graph brings the reader up to date on a specific scene, it seems to me your 3 intro graphs are doing a lot of efficient and smart work, in terms of hooking the reader.

Your structure for training an "intern simulator" comes across as well thought out and high potential.

At the top level, though, I do wonder if your current approach will be too-soon obsoleted?

The "intern simulator" is one thing. Discovering in the year 2028 that we no longer need to build software in any traditional sense of the term... is another thing!

Looking at your view, "If there are senior developer agents, how will we spend our time?" -> "We will, in other words, all become lead developers": I wonder if you're begging the question. If not, then I wonder how long, really, your described reality will last as a window of time -- will it last long enough to be relevant for career planning and such?

Post-it notes:

- I've always found the topic of training junior engineers to be super compelling. I have fond memories of successes in this area -- and poignant regrets around failures. But just because this is compelling for humans doesn't mean it's the proper metaphor for machine tooling.

- The classic (possibly Henry Ford) quote about "faster horses". Kind of like, "If I had asked engineers what they wanted, they would have asked for sleeker tools (or an updated tooling methodology)".

- If The Machine is getting so much smarter so quickly, then my primary wish is not to make it easier for engineers to build things that other humans use to do things. Rather, I just want The Machine to do things. This is some kind of riff on the Law of Demeter.

If I'm being too optimistic about "the year 2028", then that's a concrete mistake I'm making and I'm interested to see how I'm wrong. E.g., I recognize a valid issue is the limits of LLMs versus the much more ambitious dream of AGI.

But anyways the bottom line for me is that there's writing in here that I think is some of your best and most compelling, at least for this N=1 reader sample size.

Thanks for sharing!

-

"We talk about a lack of extensibility: our work today doesn't provide a foundation for the future. We talk about losing degrees of freedom: our work today precludes something we want to do in the future."

I'm not clear on what distinction is being made between these two types of critiques. Is "loss of freedom" just a more extreme version of "lack of extensibility"? Or is something more specific being referenced in this statement?
